Projects¶

This page contains project-specific information concerning the test suite.

Open Clouds for Research Environments (OCRE)¶

Motivation¶

The test suite is intended to test and validate cloud services across the stack for research and education environments. Its development originates from the HNSciCloud project, where automation was lacking: test deployments were executed manually, in a scattered manner and with no result-tracking capabilities. The test suite is being used as a validation tool for cloud services procurement in European Commission sponsored projects such as OCRE and ARCHIVER. The testing and validation in the scope of OCRE will be used as part of the selection criteria for the adoption funds available in the project, resulting in an OCRE certification process for the 27 platforms involved in the project.

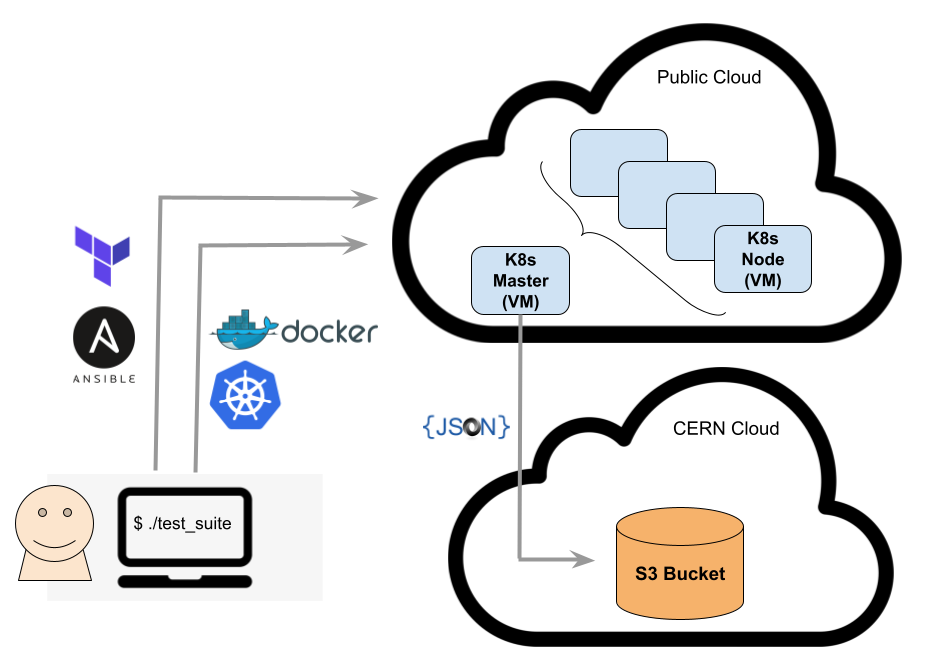

Below is an overview of the tool and of the overall test validation in the context of the OCRE project.

Scope of the Testing and Validation¶

The test suite is capable of evaluating different services across the cloud stacks:

- Networking - Network performance tests using perfSONAR.

- Standard CPU benchmarks - Runs a set of High Energy Physics (HEP) specific workloads to benchmark CPU performance.

- Basic S3 storage features validation - Checks the availability of the main S3 object store functionalities using the AWS CLI.

- Basic Data Repatriation - Tests data backup exports from the cloud provider to the Zenodo repository service.

- Machine Learning - Set of benchmarks for single and multiple node algorithm training of advanced GAN models (NNLO, ProGAN and 3DGAN), on accelerator architectures (i.e. GPUs).

- DODAS check - DODAS is a scientific software framework; this check runs a test workload from one of the LHC experiments, CMS. Successfully running this test indicates that the DODAS framework can generate clusters on demand using a public cloud, for batch workload execution based on the HTCondor workload management system.

The Tests Catalog provides additional details about these tests.
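
As an illustration of what a basic S3 features check with the AWS CLI might look like, here is a minimal sketch in Python. It is not the test suite's actual implementation (see the Tests Catalog for that). The function names, the bucket name, the endpoint URL and the `probe.txt` file are all hypothetical; only the `aws s3` subcommands (`mb`, `cp`, `ls`, `rm`, `rb`) and the global `--endpoint-url` option are standard AWS CLI features.

```python
import subprocess


def s3_check_commands(bucket, endpoint_url, local_file="probe.txt"):
    """Build AWS CLI commands for a create/upload/list/download/delete cycle.

    All argument values are placeholders chosen for this sketch.
    """
    base = ["aws", "--endpoint-url", endpoint_url, "s3"]
    target = f"s3://{bucket}"
    key = f"{target}/{local_file}"
    return [
        base + ["mb", target],                     # create the bucket
        base + ["cp", local_file, key],            # upload an object
        base + ["ls", target],                     # list the bucket
        base + ["cp", key, local_file + ".copy"],  # download the object
        base + ["rm", key],                        # delete the object
        base + ["rb", target],                     # remove the bucket
    ]


def run_s3_check(bucket, endpoint_url, local_file="probe.txt"):
    """Run each command in order and return (command, succeeded) pairs."""
    results = []
    for command in s3_check_commands(bucket, endpoint_url, local_file):
        completed = subprocess.run(command, capture_output=True, text=True)
        results.append((" ".join(command), completed.returncode == 0))
    return results
```

Calling `run_s3_check` requires the AWS CLI to be installed and configured with credentials for the provider's object store, and `probe.txt` to exist locally. A failed step shows up as `False` in the returned list rather than raising an exception.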

Results¶

Results of the runs will be stored as JSON files in an S3 bucket on the CERN cloud’s Ceph service. OCRE consortium members will be able to access all results, whilst vendors will be able to access only their own.

To provide segregated access, pre-signed S3 URLs will be used. Each vendor will be provided a list of pre-signed URLs that should be used to obtain the result files. Automation of the download of those result files is possible. Please use this tool to do it.
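
For vendors who prefer to script the download themselves, the following is a minimal sketch using only the Python standard library. It assumes the pre-signed URLs are plain HTTPS links whose path ends in the result file name; the function names and the `results` directory are hypothetical and are not part of the test suite.

```python
import os
import shutil
import urllib.request
from urllib.parse import unquote, urlsplit


def result_filename(presigned_url):
    """Return the object's base name, ignoring the signature query string."""
    path = unquote(urlsplit(presigned_url).path)
    name = os.path.basename(path)
    if not name:
        raise ValueError(f"no object name in URL: {presigned_url}")
    return name


def download_results(urls, dest_dir="results"):
    """Download each URL into dest_dir and return the list of saved paths."""
    os.makedirs(dest_dir, exist_ok=True)
    saved = []
    for url in urls:
        target = os.path.join(dest_dir, result_filename(url))
        # A pre-signed URL carries its own authorisation in the query
        # string, so a plain GET is enough while the URL is still valid.
        with urllib.request.urlopen(url) as response, open(target, "wb") as out:
            shutil.copyfileobj(response, out)
        saved.append(target)
    return saved
```

A typical use would be to read the provided URLs from a text file, one per line, and pass the resulting list to `download_results`. Note that two URLs ending in the same file name would overwrite each other in this sketch, and that an expired URL makes `urlopen` raise an `HTTPError`.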

The CERN development team is developing a dashboard that will parse the data from the JSON files, for a more user-friendly visualisation.

No test results will be made public without the agreement of the respective vendor.

The OCRE consortium aims to create a certification process for the platforms that are successfully validated. This process will be agreed and communicated to the respective vendors before being put in place.

In addition to the GÉANT contract management team, two CERN members shall be involved in the interactions:

- A CERN representative will handle all communication between the vendors' technical representatives and the CERN developers.

- A CERN technical representative will be responsible for deploying and performing validation tests. This person must also have permissions to create additional local user accounts in the award subscription, in case other members need access to run or complete those tests.

Requirements¶

In order to perform multiple runs of the test set, including the Machine Learning benchmarks, a modest subscription credit (5000€, valid for 6 months) is required for the full stack of services available in the platforms.

If a platform does not offer accelerator architectures (e.g. GPUs, FPGAs or similar vendor-specific hardware), the required amount of credit can be lower.

The number and type of tests are expected to evolve during 2021 in areas such as HPCaaS and COSBench for S3. Any additional test will be documented and this page updated. New tests shall not imply requests for additional credit.

Timeline¶

Access to the platforms should be provided to the testing team by mid-April 2021 at the latest. Results will start to become available between May and June 2021.

Main Technical Contacts¶

To handle communication effectively, please use the mailing list: cloud-test-suite AT cern.ch

The contact details of the CERN technical representative who will be responsible for deploying and performing validation tests will be provided later.

Licensing¶

The framework is licensed under AGPL. The included tests may have their own specific licenses. For more details, please refer to the Tests Catalog.